Recently, there’s been buzz in the higher education industry about defining assessment and determining its value.

In November 2016, Erik Gilbert wrote an opinion piece for *Inside Higher Ed* called “Why Assessment is a Waste of Time,” and in May 2017, Rishi Sriram published “We Need Researchers…So Let’s Stop Using the Term Assessment” in *About Campus*.

Allow me to give you my quick take on each of these before making some overarching points about assessment.

Those aware of my career and other articles I’ve written might imagine that I’d have strong feelings about Gilbert’s stance that assessment is a waste of time. You might be surprised to know that, while I disagree with his article’s title, I don’t disagree with his main argument. He argues that assessment does not adhere to research standards. While some assessments may follow research standards, I generally have no qualms conceding this point.

Why? Because assessment is not research.

I know some institutions and practitioners may conflate or equate the two, but they are distinct practices that shouldn’t be labeled as anything other than what they are. And while the line between them may seem blurry, differentiating assessment and research is possible… and necessary.

Sriram’s article encourages the higher ed field to stop using the term “assessment” and its associated practices, in favor of adopting a research paradigm. The intent behind this stance is good — namely, the belief that such a shift would elevate the importance, respect, and value associated with this work.

But this presumes that assessment is less important, respected, and valued than research. I’m not arguing that assessment is superior, or even that the two are equal; just that they’re different, and that determining the value or quality of each is subjective and circumstantial.

Both articles allude to similarities and associations between assessment and research. In each, data is collected and analyzed, and documents are prepared to share information or inform practice. While those steps occur in both workstreams, that’s about as close a comparison as one can make before the two begin to diverge. As such, it seems a tall order to compare or place value on either based on such general, objective standards.

I think this for two reasons.

First, quality and value are not easily defined or generalized in application (*Zen and the Art of Motorcycle Maintenance*, anyone?). Identity, perspective, and situation all subjectively color how they are applied. Sriram argues that scholars and researchers should “purposefully attempt to limit their biases” in design and result interpretation, while administrators and assessors “cannot help but think that a lack of desired results is a failure.”

Putting aside the notion that professionals and administrators who do assessment work cannot also be scholars, this stance makes some pretty big assumptions. It doesn’t seem to acknowledge that ACPA, NASPA, and CAS all have standards and guidelines that direct assessment efforts to mitigate bias and ensure objectivity. It’s neither fair nor accurate to assume that researchers can follow such principles of practice but assessment professionals cannot.

Moreover, Sriram’s and Gilbert’s stances remind me of a particular us/them mentality that plagues higher education. The same mentality shows up in discussions of student success, where faculty may view student affairs as less important than academic affairs.

Student affairs professionals may argue the opposite, contrasting the one-dimensional classroom learning context of academics with the multi-dimensional, holistic student learning and development offered through student affairs.

My point is not to argue either side, but rather to suggest that quality and value are more of a both/and than an either/or situation. We lose more by making judgments or comparisons in this way than we gain; we would do better to consider how both elements could function, independently or collaboratively, toward a common goal of student learning, development, or success.

Second, if you are going to make a comparison, you shouldn’t do so through one specific lens (such as comparing the merits of assessment and research by research standards). That’d be like comparing hot dogs to hamburgers by hamburger standards. Not fair.

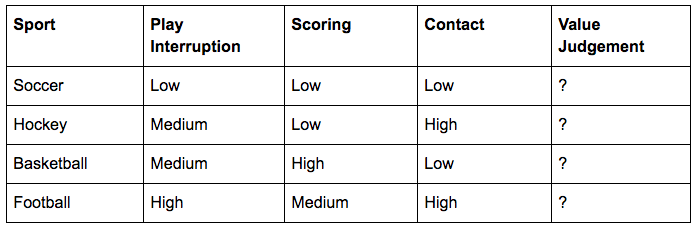

Furthermore, even if you apply an objective set of standards, it can be difficult to assign value to different things. Consider my arbitrary example below, which tries to determine the value of four different sports by the amount of play interruption, scoring, and contact.

Given the above data, which sport has the most value: football (the most high scores), soccer (the most low scores), or hockey or basketball (a diverse mix of scores)?

Perhaps it’d be easier to determine value if we used other dimensions, but how would we choose the right combination, given that each sport has its own governing organization and rules of engagement? See how tricky this can be? Now imagine evaluating the merits of all these sports solely by the norms and standards of one of them.

And it’s not as if assessment must be measured against research standards for lack of frameworks of its own. Assessment has multiple standards, guidelines, and outcome frameworks by which it can be evaluated and in which it can be grounded. Below are just some of the assessment-related standards for student affairs:

- Assessment Skills & Knowledge [ASK] Standards

- Council for Advancement of Standards in Higher Education [CAS] – Assessment Services Standards and Guidelines

- NASPA and ACPA Professional Competency Areas – Assessment, Evaluation, and Research

Even if we did put assessment and research on the same playing field, research isn’t perfect either. Gilbert lambasts assessment as “meaningless” because it doesn’t follow a research protocol.

Episode 32 of NPR’s *Hidden Brain* podcast critically examines standard research and scientific processes. It discusses a study published in 2015 in which researchers attempted to reproduce dozens of psychology studies. Fewer than half of those studies were successfully reproduced across the five criteria used to evaluate replication, undermining the credibility of the studies and of research processes overall. The episode goes on to describe foundational research studies exposed as unreproducible, which prompted widespread criticism, accusations of fraud, and concerns that research is too easy to manipulate.

Having coordinated research projects, accreditation work, review processes, academic program assessment, general education assessment, and university-level assessment, I understand that these are all separate efforts, each valuable to constituents for different reasons. I can assure you that when any of them is done poorly, or without stakeholders’ considerations and needs driving the process, it can seem meaningless or add no value to one’s work or environment.

I can also assure you that hearts and minds can be changed when we clearly understand the purpose and intention of the work. Sometimes that takes rebranding or resetting expectations, as Rachel Ebner points out in “Rebranding Student Learning Assessment.” The idea of reclassifying assessment may seem similar to Sriram’s stance, but the difference is that Ebner sees assessment’s inherent value as having been there all along.

Consider academic program assessment as an example. This work should be done to measure student learning and to use the findings to improve students’ learning experience. Yet it can be perceived as another form of faculty performance evaluation, resulting in faculty distrust, defensiveness, and reluctance to participate. However, if the intent is clarified and you appeal to faculty members’ desire to provide learning opportunities for students, there’s a clear value proposition in having data that shows how effectively learning is occurring.

Does academic assessment always have a large sample size, go through IRB approval, or follow the scientific process? No. Does that mean it lacks value or meaning in quantifying student learning and experience? Absolutely not! Even a single student’s feedback could help a faculty member strengthen their curriculum or classroom environment to improve student learning.

I’m not saying one student’s voice should always necessitate change, but it shouldn’t be discredited simply because it may not have been heard via a more formal or research-oriented means.

Assessment is more of an art than a science, and it’s an art in which I love collaborating with faculty, staff, students, and stakeholders to produce the most welcoming, accommodating, and stimulating environment for students.

How do you understand the difference between assessment and research? Tweet us @themoderncampus and @JoeBooksLevy.